Today we will speak a little about outbound HTTP connections, HTTP compression, Forefront TMG 2010 RC and its Malware Inspection and NIS(Network Inspection System, aka IPS).

By outbound HTTP connections we understand HTTP connections made by clients behind Forefront TMG 2010 RC to external web servers(to put it in a simple way).

For such clients, Forefront TMG 2010 RC can provide web antivirus/anti-malware protection and intrusion prevention against exploits of Microsoft vulnerabilities(for example Internet Explorer vulnerabilities).

Note that HTTPS-encrypted sessions can be inspected for viruses/malware or exploits if the HTTPS inspection is enabled and configured.

Now, there is one thing I’ve often seen misunderstood, and I want to mention it: to compromise the integrity of a host behind a firewall, it is not necessary to compromise the security of the firewall itself (perhaps the term firewall is not the most fortunate here; UTM might be a better one).

It boils down to the information flow control exercised by the firewall.

Forefront TMG 2010 RC, like any of the firewalls out there, operates within certain limits. It can enforce security policies at different layers. It is essential for the Forefront TMG 2010 RC administrator to understand and break down the traffic flow from Forefront TMG 2010 RC’s perspective.

Below, we will talk about Forefront TMG 2010 RC firewall policies known as access rules, more specifically about access rules that allow outbound HTTP connections on which Forefront TMG 2010 RC will apply Malware Inspection and intrusion prevention. These policies “dictate” under what conditions information can be exchanged.

When implementing web antivirus/anti-malware protection and intrusion prevention at the gateway level (and not only there), with Forefront TMG 2010 RC below, one aspect (among others) is key to the successful deployment of such a solution: minimizing the ability of attackers to bypass the inspection engines (Malware Inspection, IPS, HTTP security filter).

As we already mentioned, to bypass the inspection engines the attackers do not necessarily have to compromise the security of the firewall itself. Put another way, they do not have to hack the firewall, just hack through the firewall. This is achievable by knowing the information flow control levels between which the firewall operates.

For example, it may come down to abusing the protocol itself (HTTP in this case) and/or obfuscating the code that the client will execute. As you may have guessed, we will not speak about code obfuscation below.

And regarding the protocol(HTTP), we will chat about HTTP compression.

If time permits, in future posts we will detail other IPS or malware evasion techniques related to Forefront TMG 2010, and see whether we can make Forefront TMG 2010, when web caching is enabled, become a malware distribution point for the clients behind it.

What is special about Forefront TMG 2010 RC is that it includes a web proxy, so it can provide a comprehensive, yet strict, implementation of the HTTP protocol at the proxy level, compared to “regular deep inspection firewalls”.

However, HTTP can be a complex protocol, so a “full implementation” of the HTTP protocol on the web proxy might not be so easy to achieve.

Below we will speak about the forward web proxy scenario.

Now, regarding the information flow control: if Forefront TMG 2010 RC does not understand the HTTP traffic flow, the inspection engines might not function as desired.

Two aspects we’ve mentioned so far are important here: “knowing the information flow control levels between which the firewall operates” and “function as desired”.

This is usually where things break, for one of two reasons: the vendor is at fault, or the administrator is (or a combination of both).

Sometimes it can be difficult to understand what exactly such a firewall (UTM) does (excluding those “special” cases when the vendor itself does not know what it does, or more exactly what it is supposed to not do ;) ).

And here is where the vendor can be at fault: inappropriate documentation can lead to “not function as desired” and from the administrator's point of view to “not knowing the information flow control levels between which the firewall operates”.

The vendor will not always be keen to show how one can bypass its inspection engines due to the engines’ natural limitations. This may not be good advertising (the silver bullet sells much better). The problem that arises from here is that the administrator, not knowing exactly what has to be dealt with, will not configure the firewall policies appropriately to limit the attackers’ bypass capabilities, even where that is possible.

The vendor can take some proactive steps regarding its inspection engines’ natural limitations by using a stricter protocol implementation. However, this approach is not always possible, as it may affect functionality: the firewall should not break functionality or become a point of DoS. Still, some well-placed logging details can give the administrator various hints in certain situations.

The administrator, on the other hand, can be at fault for not understanding the firewall’s operations and the configuration process, for instance by being lazy and not reading the manual/documentation made available by the vendor.

Here are some useful links regarding IPS bypassing:

http://www.nss.co.uk/certification/ips/nss-nips-v40-testproc.pdf

http://www.metasploit.com/data/confs/blackhat2006/blackhat2006-metasploit.pdf

http://www.cisco.com/web/about/security/intelligence/cwilliams-ips.html

Coming back to HTTP compression, outbound HTTP connections, Malware Inspection and NIS, we may have touched on this subject a couple of times in the past.

HTTP compression is a nice way to maximize speed and to minimize bandwidth costs(usually HTTP/1.1 compression is achieved via the Accept-Encoding and Content-Encoding headers).

Typically HTTP compression is done either with gzip or deflate. HTML files, text files, external JavaScript files, etc. can be compressed.
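To make the two content codings concrete, here is a minimal Python sketch that compresses the same body both ways. The sample body is made up. In HTTP/1.1, gzip uses the gzip framing (RFC 1952) and deflate is defined as a deflate stream inside the zlib framing (RFC 1950), although some servers send a raw deflate stream (RFC 1951) instead; `decode_deflate` is a hypothetical helper that tolerates both.

```python
import gzip
import zlib

# Made-up, repetitive sample body (an assumption for illustration only).
body = b"<html><body>" + b"hello world " * 50 + b"</body></html>"

# Content-Encoding: gzip -> gzip framing (RFC 1952)
gzip_body = gzip.compress(body)

# Content-Encoding: deflate -> zlib framing (RFC 1950) around a deflate stream
deflate_body = zlib.compress(body)

# What some servers actually send for "deflate": a raw stream (RFC 1951)
raw = zlib.compressobj(wbits=-zlib.MAX_WBITS)
raw_deflate_body = raw.compress(body) + raw.flush()


def decode_deflate(data: bytes) -> bytes:
    """Try the zlib-wrapped form first, then fall back to raw deflate."""
    try:
        return zlib.decompress(data)
    except zlib.error:
        return zlib.decompress(data, -zlib.MAX_WBITS)


# Sizes of the original body and of the three compressed variants.
print(len(body), len(gzip_body), len(deflate_body), len(raw_deflate_body))
```

A decoder that understands only one of these framings is blind to the others, which is the property the rest of this post relies on.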

Speaking about those HTTP headers, let’s take a look at them:

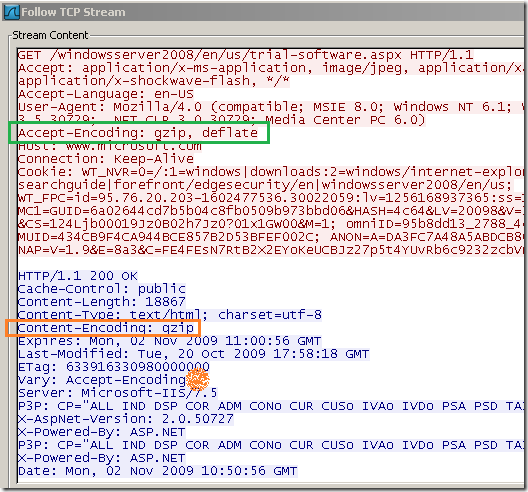

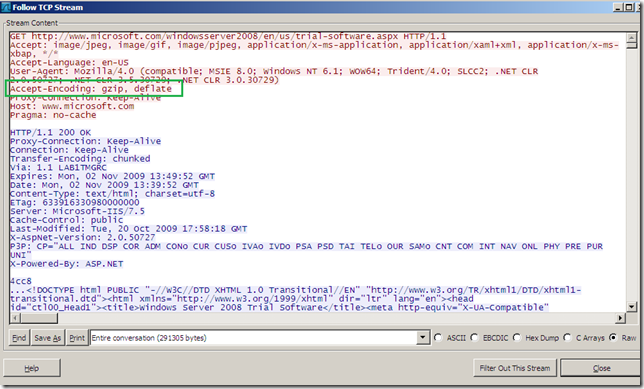

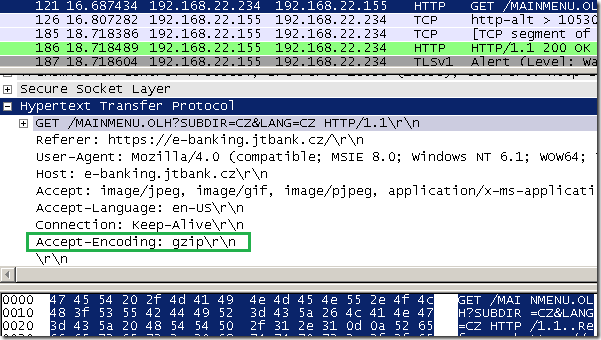

- the client (web browser, IE 8 below) sends the Accept-Encoding header, telling the server that it supports gzip and deflate compression. Here the client requested a web page.

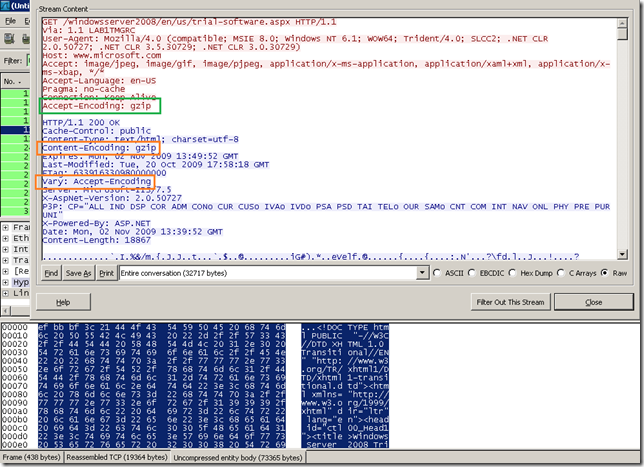

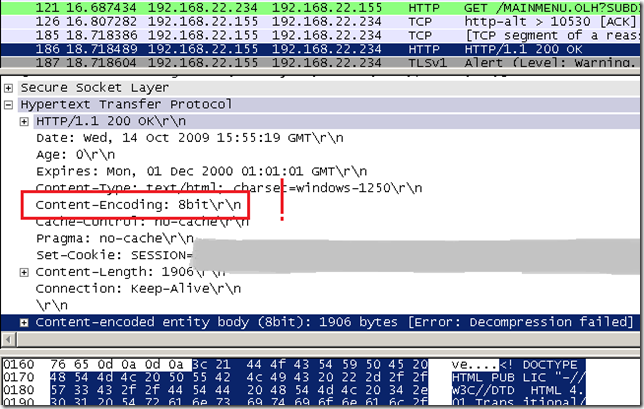

- the server (IIS 7.5 below), depending on its configuration, can compress certain files. Below, the Content-Type header tells the client the content type, and the Content-Encoding header tells it that the response was compressed with gzip. Also note the Vary: Accept-Encoding HTTP header in the response: it is read by proxy servers and indicates that a cached response should be sent only to clients that send the appropriate Accept-Encoding request header. This prevents compressed content from being sent to a client that does not support it (and thus cannot understand it). We will not discuss any caching issues related to compressed responses below.

Note that Content-Encoding: gzip in the server’s response can be used together with the Transfer-Encoding: chunked HTTP header. We will not discuss this below.

So as you can see, we have already used the “we will not discuss this below” phrase quite a few times (mostly in an attempt to keep things as simple as possible and to keep this post from becoming too long).

Now, to break down the traffic flow, without considering any security aspects:

- the client (browser) issues an HTTP request for a web site. Pretty much all modern browsers add the Accept-Encoding header by default, indicating that they support gzip or deflate (typically both). Deflate may be somewhat ambiguous in practice, depending on which RFC the implementation follows.

- the request reaches Forefront TMG 2010 RC, which, depending on its configuration, may strip the Accept-Encoding header (no compression) or may set it to Accept-Encoding: gzip.

- the web server, depending on its configuration, may reply with a gzip-compressed response, Content-Encoding: gzip (the fortunate case).

- if the response is compressed with gzip, Forefront TMG 2010 RC will ungzip it, inspect it and, based on its configuration, will pass it uncompressed to the client, or compress it again with gzip and pass it compressed to the client.
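The four steps above can be sketched in Python as follows. This is only a simplified model of the flow described in the list, not TMG’s actual implementation; the function names, the plain dictionaries used for headers and the `inspect` callback (a stand-in for the inspection engines) are all assumptions made for illustration.

```python
import gzip


def rewrite_request_headers(headers, request_compression=True):
    """Drop the client's Accept-Encoding; optionally ask the server for gzip."""
    out = {k: v for k, v in headers.items() if k.lower() != "accept-encoding"}
    if request_compression:
        out["Accept-Encoding"] = "gzip"
    return out


def process_response(headers, body, inspect, recompress=False):
    """Decode a gzip response, inspect the plain body, then forward it."""
    lowered = {k.lower(): v for k, v in headers.items()}
    coding = lowered.get("content-encoding", "identity").strip().lower()
    if coding not in ("gzip", "identity"):
        # Anything else is the unfortunate case, outside this sketch.
        raise ValueError("coding not covered by this sketch: " + coding)
    if coding == "gzip":
        body = gzip.decompress(body)
    inspect(body)  # stand-in for Malware Inspection / NIS
    out = {k: v for k, v in headers.items()
           if k.lower() not in ("content-encoding", "content-length")}
    if recompress:
        body = gzip.compress(body)
        out["Content-Encoding"] = "gzip"
    out["Content-Length"] = str(len(body))
    return out, body
```

The point of the model is the ordering: the body is decompressed before `inspect` sees it, so whatever the proxy cannot decompress never reaches the engines in readable form.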

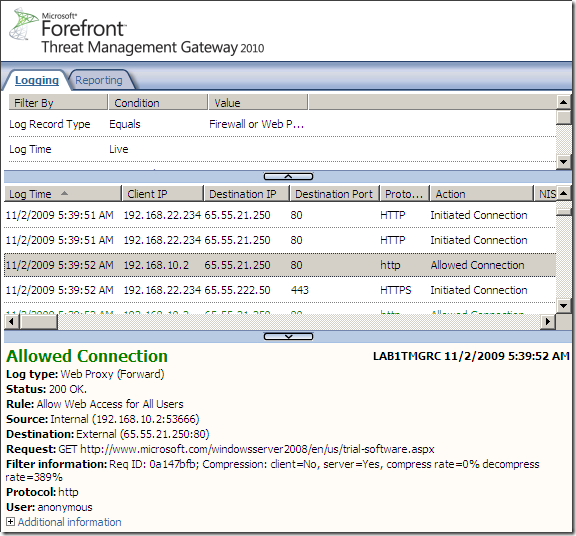

To picture this, when everybody plays nice, a web proxy client behind TMG makes a request for a web page:

- how things look on the client side, no compression between client and TMG by default:

- how things look on the Forefront TMG 2010 RC side, TMG said it supports gzip compression and the server used gzip compression, so HTTP compression occurred between TMG and the server:

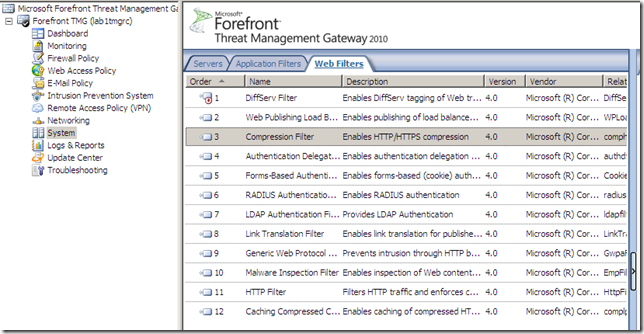

Let’s visualize the default HTTP compression settings on Forefront TMG 2010 RC:

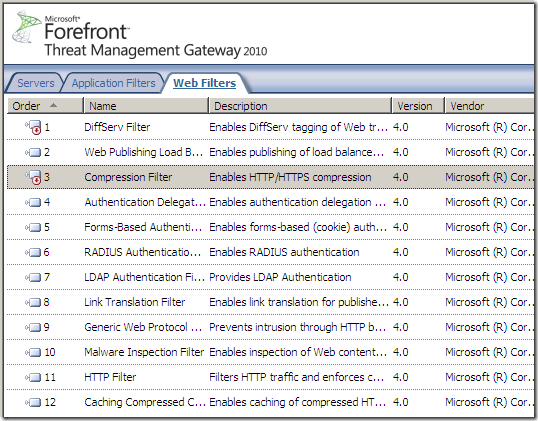

- the Compression Filter is enabled by default. According to this(refers to ISA Server 2006, but might be good info when it comes to Forefront TMG 2010 RC):

Compression Filter. This filter is responsible for compression and decompression of HTTP requests and responses. This filter has a high priority, and is high in the ordered list of Web filters. This is because the filter is responsible for decompression. Decompression must take place before any other Web filters inspect the content.

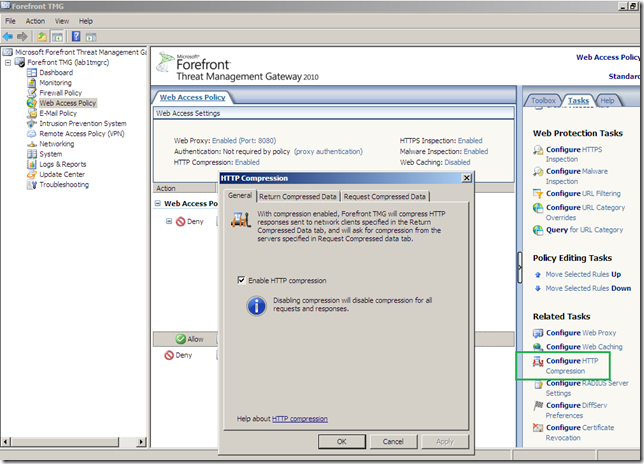

- HTTP compression is enabled by default:

- and by default Forefront TMG 2010 RC requests compressed HTTP content from the External network (meaning that if an external web server supports gzip HTTP compression, this will occur between Forefront TMG 2010 RC and this web server, as we saw in the above example):

- also by default, compression will not occur between the client behind Forefront TMG 2010 RC and Forefront TMG 2010 RC, only between Forefront TMG 2010 RC and the web server (if the web server supports HTTP compression). The exception is when the server forces a compression method that TMG does not support, in which case TMG may pass the compressed response directly to the client.

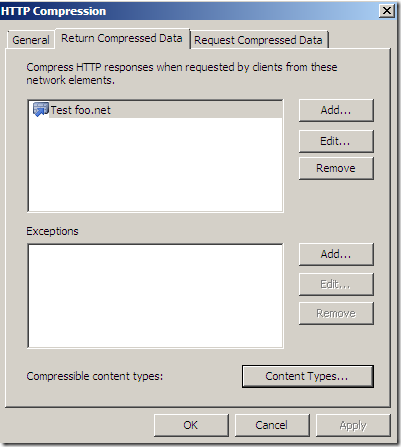

Usually the Return Compressed Data tab is used by web server publishing rules, when Forefront TMG 2010 RC acts as a reverse proxy publishing a web server behind it and an external client might request HTTP compression. As can be noted below, I have such a test web server publishing rule, so when an external client requests HTTP compression, then based on the Compressible content types configuration, HTTP compression can occur between this external client and Forefront TMG 2010 RC, saving bandwidth and maximizing speed if this is an Internet connection:

Time to sum up what we’ve seen so far, and play a cheap game.

Let’s split the information flow control into pieces, this time discussing some security aspects:

- client behind Forefront TMG 2010 RC says it supports various compression schemes. –> possible attack point

- Forefront TMG 2010 RC’s compression filter, by default, only supports gzip compression. –> possible attack point

- which inspection engine has a dependency on the compression filter, and thus can only decompress gzipped server responses? –> possible attack point

- if an inspection engine does not necessarily have a dependency on the compression filter, what type of HTTP compression does it support? –> possible attack point

- what if Forefront TMG 2010 RC does not request compression and the server returns a compressed response, or Forefront TMG 2010 RC requests gzip compression but the server responds with deflate?

- strict HTTP implementation on the proxy: obey the RFC or violate the RFC (if there is a violation, who is doing it, the attacker or TMG, and for what purpose)? –> possible attack point

To jump straight to the matter, here is a two-second cheap trick that renders Malware Inspection and NIS on Forefront TMG 2010 RC pointless while the default HTTP compression settings are in place (and no HTTP filtering is configured on the respective access rule):

- force a deflate compressed server response when Forefront TMG 2010 RC has requested gzip compression.

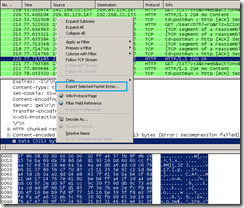

To picture this(note that I’m not using Metasploit to do this, although Metasploit implements the forced HTTP compression(gzip or deflate) trick):

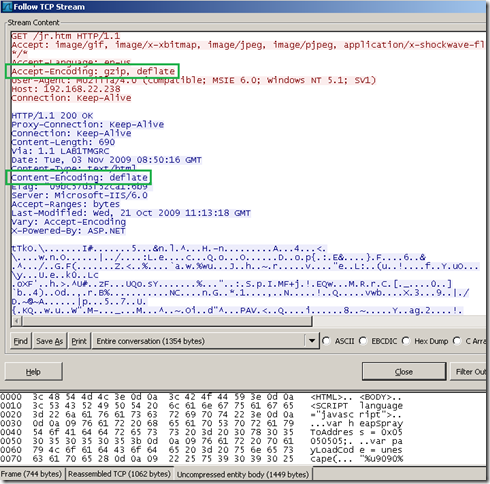

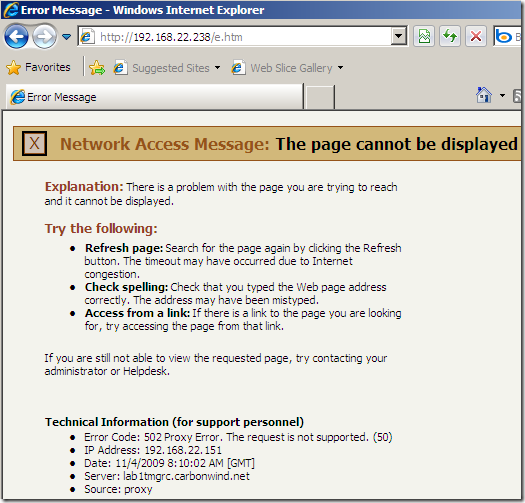

- this is how it looks on the client, note that the client(IE 6) supports both gzip and deflate, so it won’t be surprised when the server uses deflate.

- on the Forefront TMG 2010 RC side, note that TMG said it supports gzip, but the web server ignored this and used deflate.

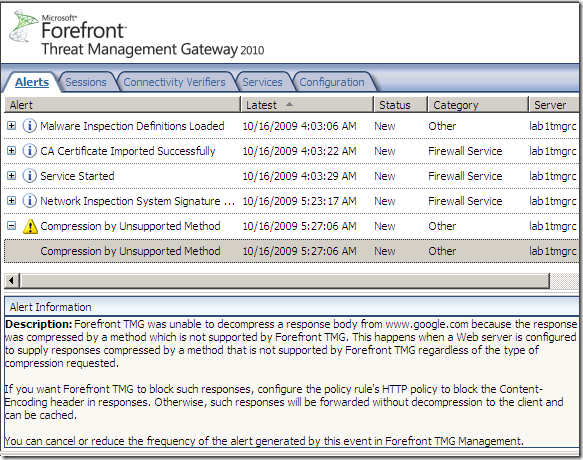

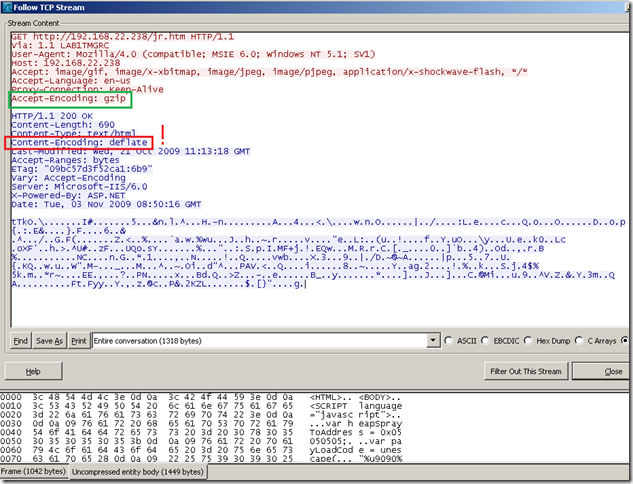

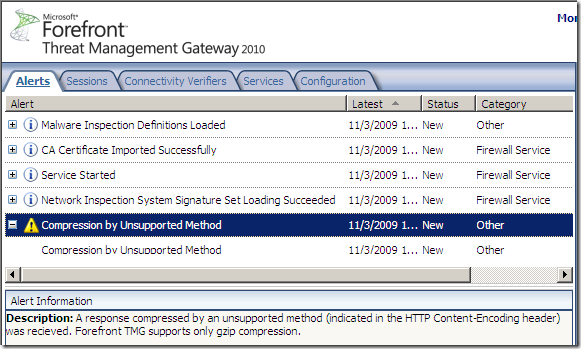

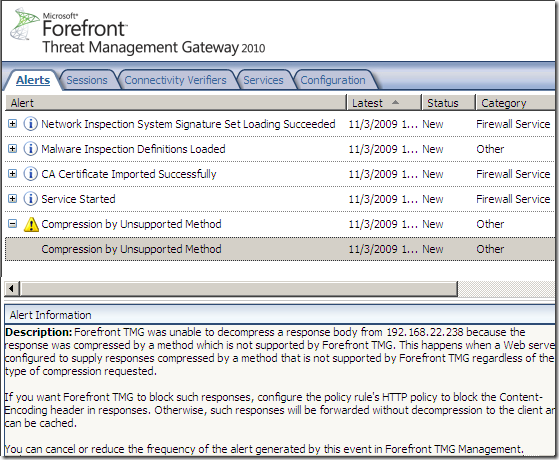

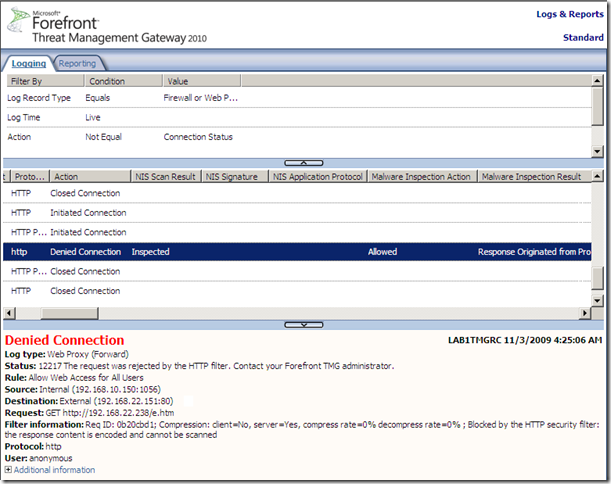

- which caused TMG to log this alert, saying(‘recieved’ :) ):

A response compressed by an unsupported method (indicated in the HTTP Content-Encoding header) was recieved. Forefront TMG supports only gzip compression.

and:

Forefront TMG was unable to decompress a response body from 192.168.22.238 because the response was compressed by a method which is not supported by Forefront TMG. This happens when a Web server is configured to supply responses compressed by a method that is not supported by Forefront TMG regardless of the type of compression requested.

If you want Forefront TMG to block such responses, configure the policy rule's HTTP policy to block the Content-Encoding header in responses. Otherwise, such responses will be forwarded without decompression to the client and can be cached.

You can cancel or reduce the frequency of the alert generated by this event in Forefront TMG Management.

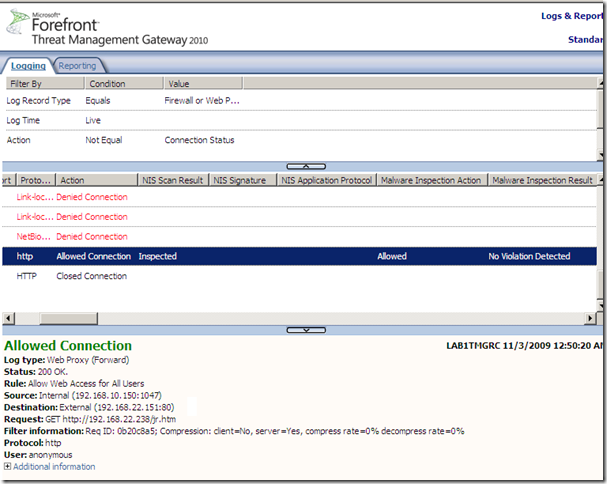

- but what was really important here: Forefront TMG 2010 RC allowed this connection, and the allowed web server response contained an exploit that both Malware Inspection and NIS would normally have detected. As can be noted, due to the compression trick this exploit went right through:

So to recap, here is why we got into this situation:

- first possible attack point: know your clients, in this case the HTTP compression methods they support; consult their documentation and/or use Wireshark to analyze their requests. It does not matter that a web server forces a certain compression method as long as the client does not support that method, since the client won’t be able to decompress the response and will just display garbled characters (it won’t execute the malicious code).

- pay attention to new HTTP compression methods that may be introduced by installing a plug-in, like the Google Toolbar, which can be installed in IE or Firefox. You may notice that after doing this, IE or Firefox may sometimes use SDCH (I’ve quickly pictured some details about SDCH for you below, as at the time of writing it may not be that easy to find details about it on the web).

- it’s not about stripping the client’s Accept-Encoding header. The attacker can reply blindly, or make decisions based on the User-Agent (it’s not recommended to mangle this one, as this may affect the content returned by the web site).

- according to RFC 2616, Hypertext Transfer Protocol -- HTTP/1.1: If no Accept-Encoding field is present in a request, the server MAY assume that the client will accept any content coding.

http://www.w3.org/Protocols/rfc2616/rfc2616-sec14.html

This means that even if you strip the Accept-Encoding client header at the proxy level, the server, according to the RFC, can still use HTTP compression.

- playing by the RFC or not is irrelevant to the attacker: if you set the desired HTTP compression at the proxy level (say gzip), the attacker is free to reply with deflate, whether or not that follows the RFC.

- automatically blocking unsupported Content-Encoding methods at the web proxy (Compression Filter) by default. Blocking methods that the proxy (Compression Filter) does not understand is desirable from a security point of view, but may not be feasible from a functionality point of view when the proxy does not provide a way of whitelisting unsupported methods, see below.

- know which HTTP compression methods Forefront TMG 2010 RC supports, and what the default settings are.

The HTTP Compression filter on Forefront TMG 2010 RC supports by default gzip compression.

- know the relationship between the inspection engines on Forefront TMG 2010 RC and the HTTP Compression filter.

The NIS(aka IPS) depends on the HTTP Compression filter to decompress the traffic.

Malware Inspection might not necessarily have this dependency, and it might be able to decompress gzipped responses itself. As far as I saw, Malware Inspection does not understand deflate HTTP compression.

The HTTP filter depends on the HTTP Compression filter to decompress the traffic; there is a gotcha here, see below.

- know what Forefront TMG 2010 RC does with a server response when the Content-Encoding header is not as expected.

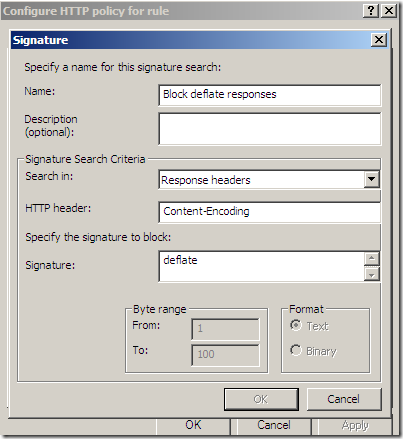

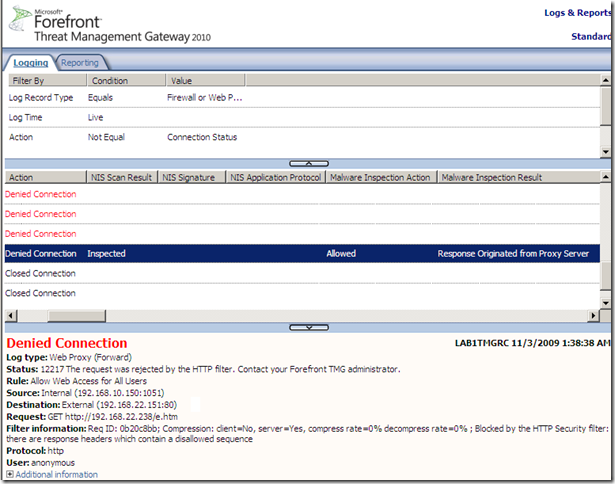

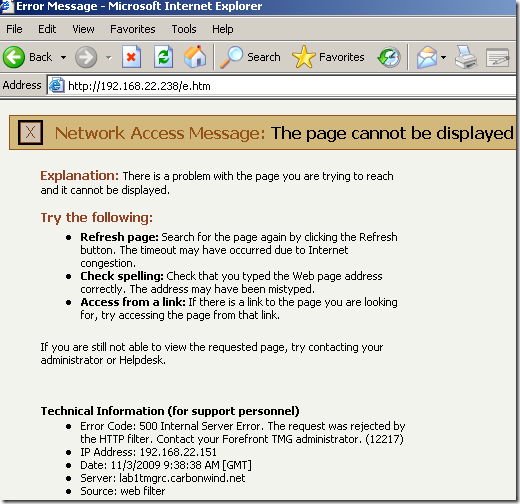

If Forefront TMG 2010 RC forwards responses compressed with HTTP compression methods that it does not support but that the clients behind it do, like deflate, configure the HTTP filter to block such responses. TMG will still log that alert, but it will block the server’s response, which avoids the situation in the above example.
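The difference between forwarding and blocking such a response can be modeled roughly as follows. This is a simplified sketch of the behavior described in this post, not TMG’s actual logic; `decide`, `PROXY_SUPPORTED` and the action names are made up for illustration.

```python
import gzip
import zlib

# Assumption: the proxy can decode only gzip, as the alert text says.
PROXY_SUPPORTED = {"gzip"}


def decide(content_encoding, body, block_unsupported):
    """Return (action, body_that_the_inspection_engines_would_see)."""
    coding = (content_encoding or "identity").strip().lower()
    if coding == "identity":
        return "inspect", body
    if coding in PROXY_SUPPORTED:
        return "inspect", gzip.decompress(body)
    if block_unsupported:
        # HTTP filter configured to block the unexpected coding.
        return "block", None
    # Default case from the example: the compressed bytes go to the client.
    return "forward-uninspected", body


deflated = zlib.compress(b"payload the client can decode but the proxy cannot")
print(decide("deflate", deflated, block_unsupported=False)[0])
print(decide("deflate", deflated, block_unsupported=True)[0])
```

With `block_unsupported=False` the deflate response falls through to the client unread, which is the evasion shown earlier; flipping the flag is the mitigation this paragraph recommends.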

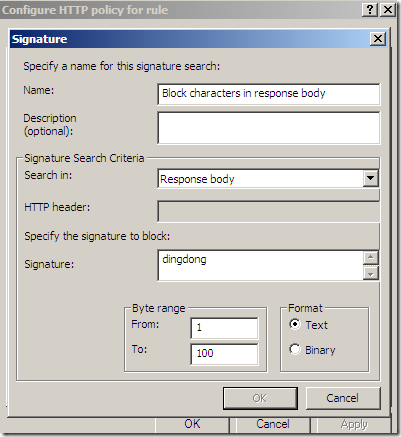

- if you configure the HTTP filter to block a signature in the server’s response body, say something like the one below, then Forefront TMG 2010 RC will block the server’s response in the deflate example from above, saying: Blocked by the HTTP security filter: the response content is encoded and cannot be scanned.

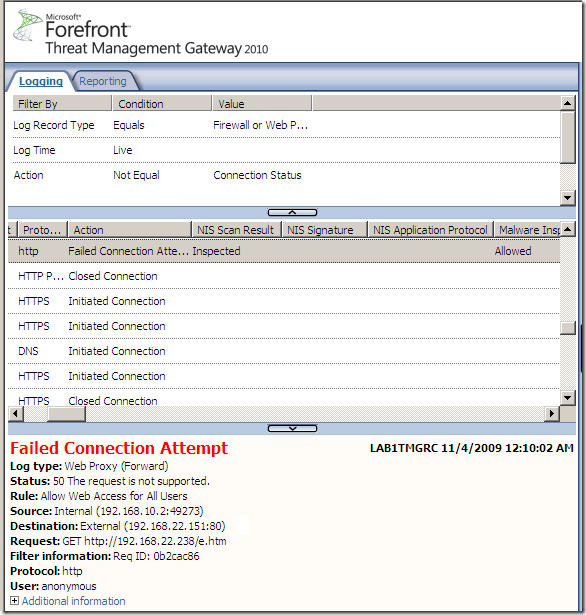

- there is a special case: when you disable the Compression Filter on Forefront TMG 2010 RC and the server forces HTTP compression. If so, the request attempt will fail with Status: 50 The request is not supported.

- the automation process by default: “I’ve not asked for it so don’t send it to me or I will drop it”. As we saw, there are several cases here to discuss:

- when one says it supports only gzip compression, and the server replies with something else.

- when one does not mention what type of HTTP compression it supports (say because it does not support any), but the server uses compression in its response.

From a security point of view, it would be desirable to automatically drop, by default and at the proxy level, any responses using “compression” methods unsupported by the proxy, to avoid any evasion of the inspection engines, given that the web proxy is part of a UTM. This automates the process and lifts some weight from the admin in the security area.

The problem with this approach is that, in the real world, some web servers (mis)behave, replying with Content-Encoding: 8bit or with Content-Encoding: 0 (just to mention a few). For example, e-banking.jtbank.cz, accessed through Forefront TMG 2010 RC (HTTPS inspection is on), replies with Content-Encoding: 8bit; as you can note, Wireshark is confused too. If the proxy simply dropped such responses, it would need a way of whitelisting the unsupported HTTP compression methods that are to be allowed in responses, or the admin would have to bypass the proxy for that web site. So functionality is affected, and some administrative weight is put on the admin, who has to identify the source of the problem with such web sites and deal with them. And from the vendor’s point of view there might be one more concern: the old story that a customer is rather happy with a “solution” that “just works”, although it may provide a lower level of security.

As can be noted below, although the response contains Content-Encoding: 8bit, the inspection engines on Forefront TMG 2010 RC (Malware Inspection and NIS) should be able to analyze the response body, as it’s not necessarily “encoded”. So if we bypass the proxy for this web site, we actually decrease the level of security for nothing.

By default, you cannot have Forefront TMG 2010 RC whitelist unsupported Content-Encoding schemes for certain web sites.

- if one sets the Content-Encoding HTTP header to something weird but does not actually “encode” the response body in any way, this won’t bypass Malware Inspection and NIS on Forefront TMG 2010 RC, as the response body will still be scanned as it is (and it’s “unencoded”). Such a “trick” will work only if the browser still parses the response body, but as said, it may be ineffective against Malware Inspection and NIS on Forefront TMG 2010 RC.

- apparently, if you configure the HTTP filter to block a signature in the server’s response body on the access rules, or you disable the HTTP Compression filter, you introduce a sort of automation that denies any unexpected Content-Encoding headers by default.
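When looking at a capture, one quick way to tell a body that is merely mislabeled (like the Content-Encoding: 8bit case) from one that is genuinely compressed is to look at its first bytes. The sketch below is a heuristic only, and `classify_body` is a hypothetical helper: a gzip stream starts with the bytes 1f 8b, a zlib stream (what HTTP calls deflate) starts with a two-byte header that satisfies a simple checksum, and a raw deflate stream has no signature at all, so it cannot be recognized this way.

```python
import gzip
import zlib


def classify_body(body: bytes) -> str:
    """Guess the framing of a response body from its first two bytes."""
    if body[:2] == b"\x1f\x8b":
        return "gzip"
    if len(body) >= 2:
        cmf, flg = body[0], body[1]
        # zlib header: method 8 (deflate), window size nibble <= 7,
        # and the 16-bit header value is a multiple of 31.
        if (cmf & 0x0F) == 8 and (cmf >> 4) <= 7 and ((cmf << 8) | flg) % 31 == 0:
            return "zlib"
    return "unknown"


print(classify_body(gzip.compress(b"<html></html>")))   # gzip
print(classify_body(zlib.compress(b"<html></html>")))   # zlib
print(classify_body(b"<html>plain text</html>"))        # unknown
```

Short plain-text bodies can occasionally pass the zlib check by accident, so a result of "zlib" should be confirmed by actually trying to decompress the body.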

SDCH (Shared Dictionary Compression over HTTP)

Google introduced some time ago Shared Dictionary Compression over HTTP(SDCH), which “proposes an HTTP/1.1-compatible extension that supports inter-response data compression by means of a reference dictionary shared between user agent and server.”

You can find on this page the link to the PDF document that describes it:

http://groups.google.com/group/SDCH

And here is the announcement of SDCH on the IETF mailing list:

http://lists.w3.org/Archives/Public/ietf-http-wg/2008JulSep/0441.html

Jon Jensen put together a couple of links discussing SDCH:

http://blog.endpoint.com/2009/07/sdch-shared-dictionary-compression-over.html

What SDCH does, in simple words (assuming both the client and server support it and have “acknowledged” that support), concerns responses that retransmit the same data many times (header, footer, JavaScript and CSS). Both client and server use a dictionary (the server indicates the location from which the client can download it) that stores the repeating strings; each applicable HTTP response then includes references to the strings in the dictionary rather than repeating those strings.

SDCH is already used by Chrome or by the Google Toolbar in IE or Firefox(I haven’t used this so I can’t comment on it).

On the server side, Google’s servers support this; more exactly, you may see it used with Google’s search engine:

It is typically used together with gzip on the server side.

SDCH is applied first and then gzip; on the client the process is reversed.
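The ordering matters when reading the Content-Encoding header: HTTP/1.1 lists content codings in the order in which they were applied, so a receiver undoes them from last to first. A minimal sketch, where the function names and the gzip-only default are assumptions for illustration:

```python
def decoding_order(content_encoding):
    """Codings are listed in the order applied, so undo them last-to-first."""
    applied = [c.strip().lower() for c in content_encoding.split(",") if c.strip()]
    return applied[::-1]


def codings_proxy_cannot_undo(content_encoding, supported=frozenset({"gzip"})):
    """Codings that a gzip-only proxy (an assumption here) cannot undo."""
    return [c for c in decoding_order(content_encoding) if c not in supported]


print(decoding_order("sdch, gzip"))             # ['gzip', 'sdch']
print(codings_proxy_cannot_undo("sdch, gzip"))  # ['sdch']
```

So even a proxy that can ungzip the outer layer of a Content-Encoding: sdch, gzip response is still left with an SDCH-encoded body it cannot read.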

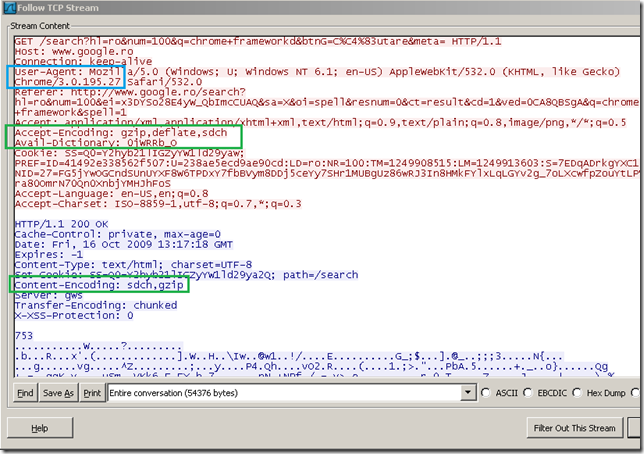

To quickly picture an example with Wireshark:

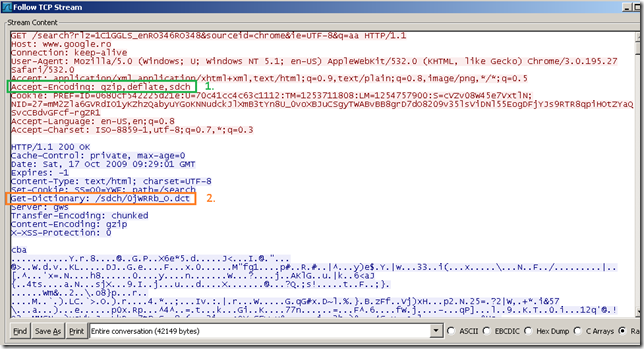

- the client presents the Accept-Encoding: gzip,deflate,sdch HTTP header. [1]

- the server sees the sdch from the client’s Accept-Encoding HTTP header, and indicates a dictionary that the client can use with the Get-Dictionary HTTP header(note that the server does not use SDCH yet as it has not also received the Avail-Dictionary: ‘dictionary-client-id’ HTTP header from the client) [2]:

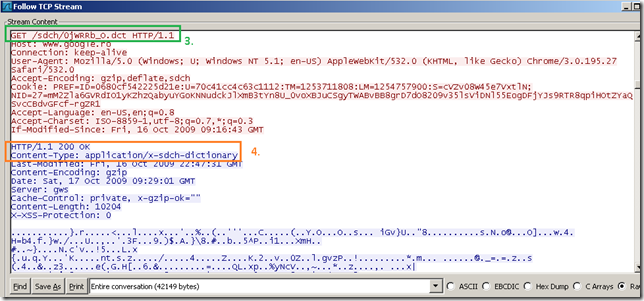

- the client downloads the dictionary [3] [4]:

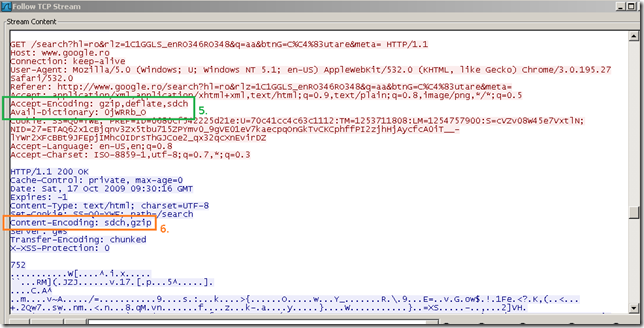

- next, when the client makes a new HTTP request, it presents the Accept-Encoding: gzip,deflate,sdch and Avail-Dictionary: ‘dictionary-client-id’ HTTP headers (so that the server is aware of the dictionary the client possesses) [5].

- the server sees sdch in the client’s Accept-Encoding HTTP header and the Avail-Dictionary: ‘dictionary-client-id’ HTTP header (it recognizes the dictionary advertised by the client), and the request is not an HTTPS request, so it can return an encoded response [6] using a combination of SDCH + gzip, Content-Encoding: sdch, gzip.

We can reverse the process and, using Wireshark and the open-vcdiff package made available by Google, manually decode the server’s response (this topic will walk you through):

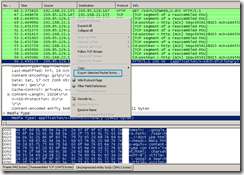

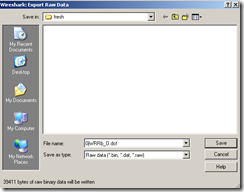

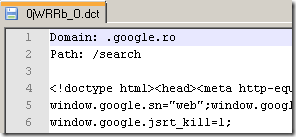

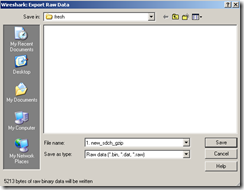

- export the dictionary from the Wireshark capture:

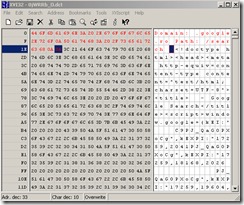

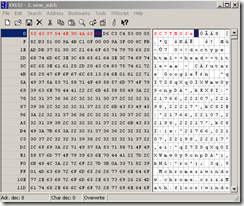

- with a hex editor (not a text editor, to avoid corrupting the dictionary), remove the ‘Domain:’ and ‘Path:’ headers, and remove the blank line too. Then save the result (note that below I’ve marked in red the bytes that need to be removed from the dictionary).

- export the sdch-gzipped response from Wireshark:

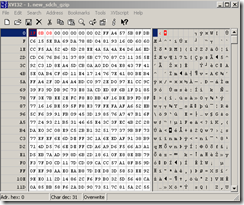

- ungzip the gzipped response with some tool (7-Zip can do that); note that below I’ve marked in red the bytes that indicate we are looking at a gzipped response (remember our discussion from here).

- with a hex editor, remove the first nine bytes (the server ID and the terminating null character) from the ungzipped response (now an SDCH-only compressed response); note that below I’ve marked in red the bytes that need to be removed from the response.

- use vcdiff (installed on a Debian machine below) to manually decode the SDCH compressed response:

- with decode, specify that vcdiff will decode the SDCH compressed response.

- with the -dictionary option, specify the dictionary (edited as above).

- with the -delta option, specify the SDCH compressed file (edited as above).

- with the -target option, specify the file where the decoded response will be saved.

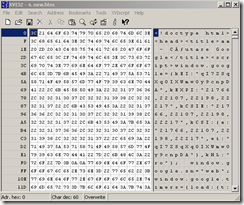

I’ve pictured the decoded response below within a hex editor.

vcdiff decode -dictionary 0jWRRb_O_edit.dct -delta new_sdch_edit -target new.htm
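The hex-editor steps that prepare the two input files for this command can also be scripted. The following Python sketch mirrors them under the assumptions stated in the steps above: the exported dictionary starts with a header block ended by a blank line, and the ungzipped response starts with the server ID followed by a null byte (nine bytes in total). The function names are hypothetical.

```python
import gzip


def strip_dictionary_headers(raw_dict: bytes) -> bytes:
    """Drop the 'Domain:'/'Path:' header block and the blank line after it."""
    ends = []
    for sep in (b"\r\n\r\n", b"\n\n"):
        idx = raw_dict.find(sep)
        if idx != -1:
            ends.append(idx + len(sep))
    if not ends:
        raise ValueError("no blank line found after the dictionary headers")
    return raw_dict[min(ends):]


def strip_server_id(sdch_body: bytes) -> bytes:
    """Drop the 8-byte server ID and its terminating NUL (nine bytes)."""
    if len(sdch_body) < 9 or sdch_body[8:9] != b"\x00":
        raise ValueError("missing server ID prefix")
    return sdch_body[9:]


def prepare_delta(gzipped_response: bytes) -> bytes:
    """Ungzip the exported response, then remove the server ID prefix."""
    return strip_server_id(gzip.decompress(gzipped_response))
```

The results of `strip_dictionary_headers` and `prepare_delta` would then be written in binary mode to the files passed to vcdiff with -dictionary and -delta respectively.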

A few words about SDCH:

- for the moment, as far as I saw, it is used only on Google’s web servers (to speed up your Google searches).

- the mod_sdch project for Apache does not currently show much activity, so it’s more difficult to play with it right now (which can be a good thing from certain points of view).

- Google Chrome is the only browser that supports it by default. Other browsers may support it if you install the Google Toolbar on them.

- at the time of writing, I’m not aware of any “hacking” related activities involving SDCH. By contrast, the trick of forcing gzip or deflate HTTP compression was integrated into Metasploit a long time ago.

- assuming one wants to force it in the server’s response, one first has to make the client download one’s dictionary. As we already said, simply stripping the client’s Accept-Encoding header will not stop anyone from forcing compression on the server side. SDCH is no exception: based on the User-Agent, the server may recognize a client that should support SDCH, so in the first response the server can tell the client, with the Get-Dictionary HTTP header, that a dictionary is available for download. The client may download it, and then may indicate the dictionary it possesses with the Avail-Dictionary header. The proxy will once more strip the unneeded Accept-Encoding header from this request, but the server can now “safely” reply with an SDCH compressed answer.

If you strip the Get-Dictionary header from the server’s responses, it may be enough to stop the use of SDCH, but unfortunately you cannot do that out of the box on Forefront TMG 2010 RC.

You can, though, block responses containing the Get-Dictionary header, or block responses whose Content-Encoding header contains ‘sdch’. A combination of the two is better. And maybe also block requests containing the Avail-Dictionary header.
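Expressed as a generic header check, the combination of these three blocks looks roughly like this. It is a sketch of the policy only, not of how the rules are configured in TMG; the function name and the returned reason strings are made up for illustration.

```python
def sdch_block_reason(direction, headers):
    """Return why a message should be blocked, or None to let it through."""
    lowered = {k.lower(): v for k, v in headers.items()}
    if direction == "response":
        if "get-dictionary" in lowered:
            return "response advertises an SDCH dictionary"
        codings = [c.strip().lower()
                   for c in lowered.get("content-encoding", "").split(",")]
        if "sdch" in codings:
            return "response body is SDCH encoded"
    elif direction == "request":
        if "avail-dictionary" in lowered:
            return "request announces a downloaded SDCH dictionary"
    return None


print(sdch_block_reason("response", {"Content-Encoding": "sdch, gzip"}))
print(sdch_block_reason("response", {"Content-Encoding": "gzip"}))
```

Blocking the Get-Dictionary response stops the dictionary from being advertised in the first place; the other two checks catch clients that already hold a dictionary.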

Below, for example, I’ve “forced” (sort of) SDCH compression through Forefront TMG 2010 RC between Google Chrome behind Forefront TMG 2010 RC and Google’s servers, with Forefront TMG 2010 RC at its default settings (remember that by default Forefront TMG 2010 RC sets Accept-Encoding: gzip in its requests). I’ve cheated a little, as I intercepted and modified on the fly the requests from Forefront TMG 2010 RC (the Accept-Encoding header). The regular ‘unsupported compression method’ alert was logged, and the response was allowed through.